Historical Load and Pipeline Concurrency

Once the pipelines have been setup, they will continue to run as per the schedule. However you might want to load the historical data due to one of the many reasons including:

- Adding data to warehouse for historical analysis

- Maintaining health and quality of the warehouse

- Back dated data addition to the source

The historical load is relevant only for the incremental dedupe feeds.

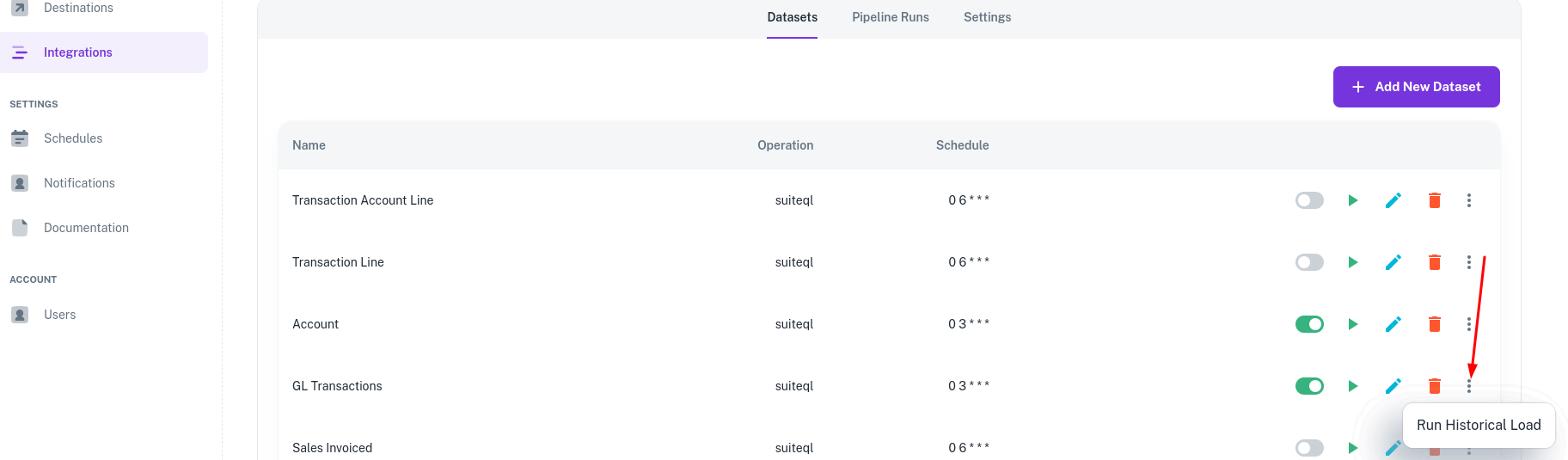

To run historical load - Navigate to the Integrations -> { Your Integration } and select your dataset.

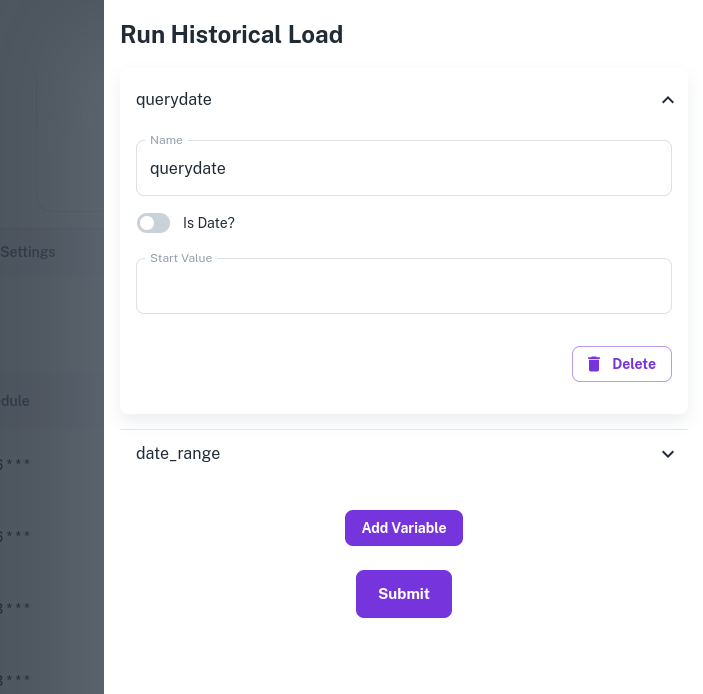

On opening the drawer you will see it populated with the Pipeline Variables. In the above example I want to run historical load by using querydate variable and given the data volume we want to load data of one day at a time.

- Select the variable

- Mark it as a date variable

- Define the start_value, end_value, skip time unit as 1 and time unit as day

- Select the time format

Doing the above will generate a date for each of the days between start_value and end_value.

Examples

Backload by a single date: For only one date variable: query_date

start_value as 01/01/2021, end_value, skip_time_unit = 1, time unit = DAY as 31/12/2021, 365 dates for each of the days of the year will be generated and a pipeline run will be submitted with each day, i.e - 01/01/2021, 02/01/2021, 03/01/2021 .....31/12/2021

365 pipeline runs will be submitted on submitting the form. Each of the pipeline run will correspond to each day.

start_value = 01/01/2021, end_value = 31/12/2021, skip_time_unit = 1, time unit = MONTH, 12 pipeline runs submitted with each having the start of the month value - 01/01/2021, 01/02/2021, 01/03/2021, .......01/12/2021

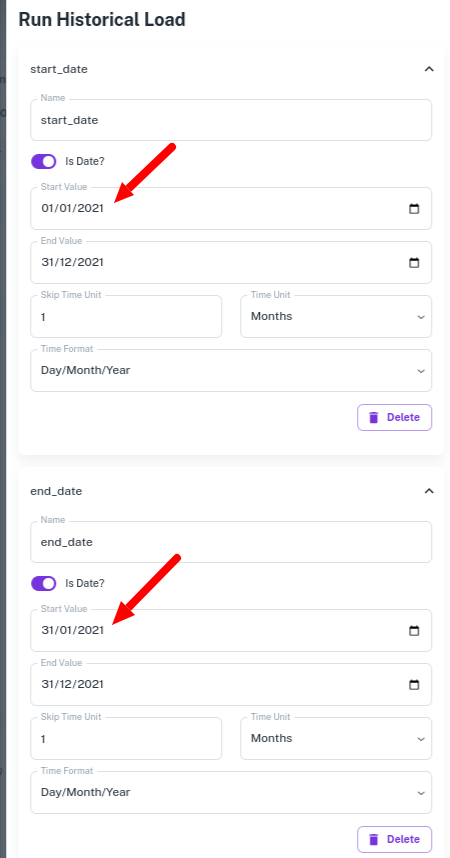

- Backload by using a date range: Let's assume we have two pipeline variables and the API fetch happens by passing a date range (i.e. two pipeline variables) - start_date, end_date. To run the historical load I want to submit 12 runs having the following combination:

{ "start_date": 01/01/2021, "end_date": 31/01/2021},

{ "start_date": 01/02/2021, "end_date": 28/02/2021},

{ "start_date": 01/03/2021, "end_date": 31/03/2021},

....

{ "start_date": 01/12/2021, "end_date": 31/12/2021}

To generate these combinations, here's the setting to provide:

Additional parameters: If you want to provide any additional parameter, let's say fetch only the active records during the historical load, please follow the following process:

Add a pipeline variable - active = true

Under Historical load, keep the is_date flag off and provide a static value. Let's consider the above example and say we provide an additional static value is_Active=true, this is how the combinations will look:

{ "start_date": 01/01/2021, "end_date": 31/01/2021, "is_active": true},

{ "start_date": 01/02/2021, "end_date": 28/02/2021, "is_active": true},

{ "start_date": 01/03/2021, "end_date": 31/03/2021, "is_active": true},

....

{ "start_date": 01/12/2021, "end_date": 31/12/2021, "is_active": true}

Points to note

- Historical Load is a resource intensive process

- You can load data for a given date range and need not run a complete sync of the source and the destination

- The schema management and evolution will take place as the data is getting loaded.

- The pipelines run with a concurrency of 1, i.e. at any given time only one pipeline of the selected dataset will run. This is to avoid the race conditions in the data.

Pipeline Concurrency

ELT Data has a default concurrency of pipelines set to 1, i.e. any any given time only one instance of a pipeline will run. This is to avoid any race conditions in the data.

Among all the concurrent runs at any given time only one run would execute while the rest would stay in queue.

In the queue ordering is not maintained and any of the waiting runs can start executing.